In this guest blog Dr Alexander Eriksson discusses the current state of development of the sensor technology needed to support autonomous vehicles.

Dr Eriksson is a visiting researcher at the University of Southampton, a researcher at the Swedish National Road and Transport Research Institute, and Competence Area Leader for Driving Simulator Applications at the SAFER Vehicle and Traffic Safety Centre at Chalmers, Sweden.

While regulation of the construction, equipment and use of motor vehicles is not devolved to Wales, the Economy, Infrastructure and Skills Committee will be looking at the implications of autonomous vehicles for Wales on 23 May. This blog provides an insight into the development process for the technology and some key issues still to be resolved.

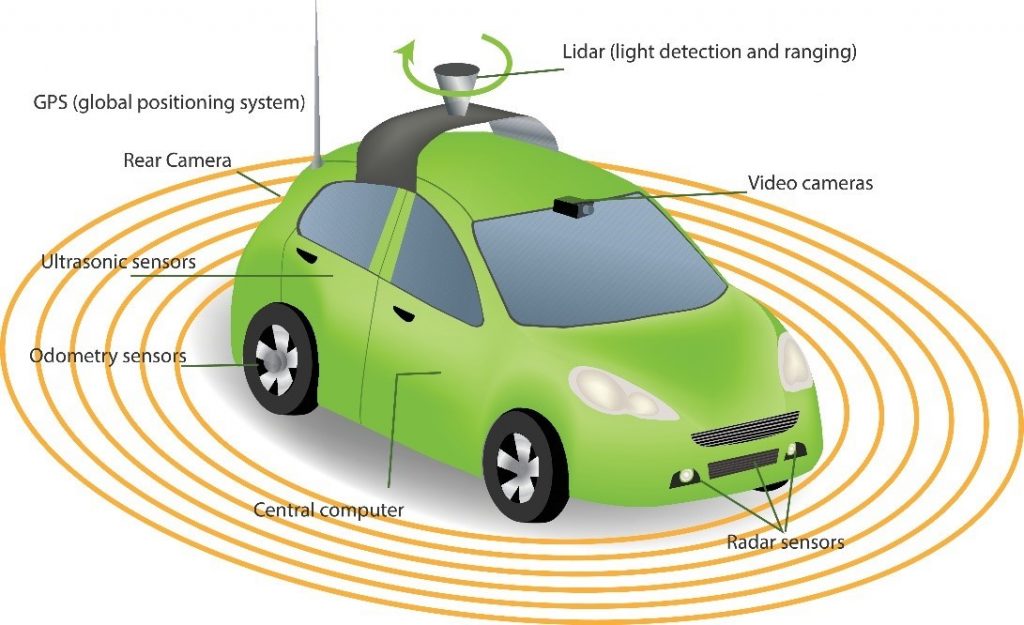

Automated vehicles are currently a hot topic in the automotive, research, regulatory and law-making domains, and they are predicted to reduce accident rates significantly once they reach the market. It has been estimated that human error causes or contributes to up to 90% of road accidents. To achieve a reduction in crashes, engineers are developing advanced software that can drive the vehicle without a human driver. To aid them they have a vast array of sensors, including RADAR (radio detection and ranging), LIDAR (light detection and ranging) devices and conventional cameras such as those found in mobile phones.

What are the technology challenges?

The advent of this technology comes with several challenges. For instance, the sensors used in automated vehicles are unlikely to work effectively on their own, as each has limitations. Additionally, if the development of automated driving technologies continues as it has to date, the widespread use of different sensors, and of the underlying computer software, may turn out to be a policy-making nightmare!

Radar

Interference has recently become a concern as automotive RADARs are more widely deployed for driving automation applications. As most contemporary RADAR systems operate in the same frequency band (76-77 GHz), there is concern that vehicles equipped with automated driving systems using multiple RADARs may interfere with one another, a risk that grows as the number of such vehicles rises. This interference could increase the computing time needed, because it lowers what is known as the signal-to-noise ratio (the level of a desired signal relative to the level of background noise), and could even give rise to “ghost targets”, showing obstructions where there are none.
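
The effect described above can be illustrated with a minimal Python sketch. It assumes, for simplicity, that interference from another radar behaves like extra noise power, and that a detector reports an object in any range bin whose power exceeds the noise floor by a fixed threshold. The function names, power levels and the 13 dB threshold are hypothetical illustrations, not taken from any real radar; production systems use more elaborate, adaptive detectors.

```python
import math


def snr_db(signal_w: float, noise_w: float, interference_w: float = 0.0) -> float:
    """Signal-to-noise ratio in decibels.

    Simplifying assumption: interference from other radars in the same band
    is treated as extra noise power added to the receiver's own noise.
    """
    return 10.0 * math.log10(signal_w / (noise_w + interference_w))


def detect_targets(bin_power_w: list[float], noise_w: float,
                   threshold_db: float = 13.0) -> list[int]:
    """Indices of range bins whose power exceeds the noise floor by threshold_db.

    threshold_db is a hypothetical value chosen for illustration.
    """
    return [i for i, p in enumerate(bin_power_w)
            if 10.0 * math.log10(p / noise_w) > threshold_db]


NOISE_W = 1e-12  # illustrative receiver noise power in watts

# One real obstacle in range bin 3, 20 dB above the noise floor.
clean = [NOISE_W] * 8
clean[3] = 1e-10

# Another vehicle's radar leaks energy into range bin 6.
interfered = list(clean)
interfered[6] += 5e-11

print(snr_db(1e-10, NOISE_W))               # about 20 dB
print(snr_db(1e-10, NOISE_W, 9e-12))        # about 10 dB: interference lowers the SNR
print(detect_targets(clean, NOISE_W))       # [3]
print(detect_targets(interfered, NOISE_W))  # [3, 6]: bin 6 is a ghost target
```

The same mechanism works in both directions: interference spread across all bins lowers the SNR of real targets, while interference concentrated in one bin can push it over the detection threshold and create an obstruction that is not there.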

LIDAR

LIDAR devices are currently the bread and butter of sensing in highly automated driving applications. Traditionally, LIDAR sensors use a rotating set of mirrors or prisms to sweep laser beams across their surroundings, timing the reflections to build a ‘point cloud’ of measured points. These sensors are accurate to within a couple of centimetres and have a range of approximately 100 metres.
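
The geometry behind a point cloud can be sketched in a few lines of Python. This is a simplified illustration assuming an idealised time-of-flight sensor: each echo's round-trip time gives a distance, and the beam's angles at that moment place the point in 3D. The function names and the sample values are hypothetical; real sensors also correct for timing offsets, beam divergence and vehicle motion.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to a reflecting surface from a laser pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half of c * t.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def to_point(range_m: float, azimuth_deg: float,
             elevation_deg: float) -> tuple[float, float, float]:
    """Convert one measurement (range plus beam angles) to x, y, z in metres."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (range_m * math.cos(el) * math.cos(az),
            range_m * math.cos(el) * math.sin(az),
            range_m * math.sin(el))


def point_cloud(echoes: list[tuple[float, float, float]]) -> list[tuple[float, float, float]]:
    """Build a point cloud from (round_trip_s, azimuth_deg, elevation_deg) samples."""
    return [to_point(range_from_time_of_flight(t), az, el) for t, az, el in echoes]


# An echo from an object about 100 m away returns after roughly 667 nanoseconds.
print(range_from_time_of_flight(667e-9))  # roughly 99.98 m

# Centimetre-level accuracy requires sub-nanosecond timing:
# a 2 cm range error corresponds to about 0.13 ns of round-trip time.
print(2 * 0.02 / SPEED_OF_LIGHT_M_S)

cloud = point_cloud([(667e-9, 0.0, 0.0), (667e-9, 90.0, 0.0)])
```

The second calculation shows why centimetre accuracy is demanding: the sensor's clock must resolve round-trip times to a small fraction of a nanosecond.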

However, these sensors are sensitive to what is known as “atmospheric dampening”: the wavelength of the laser light is smaller than water droplets, snowflakes, fog and dust particles, which scatter the beam and reduce the signal-to-noise ratio. In these conditions, this effectively limits the range of LIDAR to one similar to that of human vision.
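
How quickly fog eats into the returned signal can be estimated with a rough Python sketch. It assumes Beer-Lambert attenuation over the out-and-back path, and uses Koschmieder's relation (visibility of about 3.912 divided by the extinction coefficient) to translate a visibility distance into an extinction coefficient. Applying a visible-light relation at LIDAR wavelengths, and ignoring spreading loss and target reflectivity, are simplifying assumptions; the visibility figures are illustrative.

```python
import math

# Koschmieder's relation: visibility V = ln(50) / alpha (about 3.912 / alpha),
# based on a 2% contrast threshold. Using it at LIDAR wavelengths is an assumption.
KOSCHMIEDER = math.log(50)


def extinction_from_visibility(visibility_m: float) -> float:
    """Extinction coefficient alpha (per metre) implied by a visibility distance."""
    return KOSCHMIEDER / visibility_m


def two_way_transmission(range_m: float, alpha_per_m: float) -> float:
    """Fraction of pulse energy surviving the trip out and back (Beer-Lambert).

    Ignores the geometric 1/R^2 spreading loss and target reflectivity.
    """
    return math.exp(-2.0 * alpha_per_m * range_m)


clear = extinction_from_visibility(10_000.0)  # clear air, about 10 km visibility
fog = extinction_from_visibility(50.0)        # dense fog, about 50 m visibility

print(two_way_transmission(100.0, clear))  # about 0.92
print(two_way_transmission(100.0, fog))    # about 1.6e-07
```

Under these assumptions, a target at 100 m that returns over 90% of the pulse energy in clear air returns less than a millionth of it in dense fog, which is why the usable range shrinks towards what a human driver could see.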

A further issue with LIDAR sensors was revealed in 2015, when security researcher Jonathan Petit managed to hack LIDAR sensors and introduce fake data. Petit was quoted as saying, “I can take echoes of a fake car and put them at any location I want”. He further stated that “I can spoof thousands of objects and basically carry out a denial of service attack on the tracking system so it’s not able to track real objects”. All this was accomplished using cheap hardware (such as a Raspberry Pi or an Arduino, and a laser pointer), which suggests that such vulnerabilities may be a target for those wishing to disrupt self-driving technology.

Camera technology

Camera technology has long been a vital component of driving automation features in vehicles. Camera-based sensors are crucial to ensure that the vehicle positions itself correctly in the lane and, using stereo cameras, the vehicle is also able to gauge depth from the image. One of the early applications of cameras in automotive automation was by Carnegie Mellon University in the mid-1990s, using a single-camera system with relatively low resolution (440x525 tv-lines) that allowed the vehicle to position itself in the lane. Contemporary vehicles often use two or more high-resolution cameras, with Tesla using eight. Using two cameras has a drawback: a stereo camera system requires more computing power to process the imagery. However, its software and algorithms are less complex than those of a single-camera system, which may mean that stereo camera systems are more reliable and simpler to implement, despite requiring more computing power.
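
The way a stereo pair gauges depth comes down to simple triangulation, sketched below in Python. It assumes an idealised, rectified pair of cameras, where a point's depth is the focal length times the distance between the cameras (the baseline) divided by the disparity, the horizontal shift of that point between the two images. The focal length and baseline are hypothetical values. The computationally expensive step, matching every pixel between the two images to find its disparity, is not shown.

```python
def stereo_depth_m(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_px * baseline_m / disparity_px


def depth_error_m(focal_px: float, baseline_m: float, depth_m: float,
                  disparity_error_px: float = 1.0) -> float:
    """Approximate depth uncertainty from a disparity error: dZ ~ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


# Hypothetical camera: 1000 px focal length, cameras mounted 0.3 m apart.
print(stereo_depth_m(1000.0, 0.3, 10.0))  # about 30 m
print(depth_error_m(1000.0, 0.3, 30.0))   # about 3 m for a 1-pixel matching error
print(depth_error_m(1000.0, 0.3, 10.0))   # about 0.33 m
```

Because the error grows with the square of the distance, stereo depth is most precise for nearby objects, which is one reason cameras are usually combined with other sensors.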

Sensor fusion

“Sensor fusion” is the solution for overcoming the limitations of each individual sensor: data from multiple sources are “fused” and the results from each sensor are cross-checked. This approach also provides redundancy: should one sensor fail, there are still sufficient data to maintain safe operation until a driver can step in. Redundancy is commonplace in, for example, the aerospace industry, where aircraft have multiple hydraulic lines, flight computers and sensors. The drawback is that vehicles must be fitted with several sensors, which may increase their cost significantly. Additionally, it has recently been shown that the gains in fuel economy and environmental impact of automated vehicles are dwarfed by the vast power requirements for making sense of the data coming from the sensors. Thus, work is needed to determine a suitable suite of sensors for automated driving. Each manufacturer appears to be making this effort in isolation, as shown by the different sensor arrays they use.
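
Fusion, cross-checking and redundancy can be illustrated with a deliberately simple Python sketch. It uses inverse-variance weighting, a common textbook fusion technique, not necessarily what any manufacturer uses: each working sensor's distance estimate is weighted by how precise it is assumed to be, failed sensors are skipped, and pairs of sensors that disagree by more than a few standard deviations are flagged. The sensor readings and uncertainties are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Optional


@dataclass
class RangeReading:
    sensor: str
    distance_m: Optional[float]  # None if the sensor has failed or sees nothing
    std_dev_m: float             # assumed 1-sigma measurement uncertainty


def fuse(readings: list[RangeReading]) -> Optional[tuple[float, float]]:
    """Inverse-variance weighted average of the working sensors.

    More precise sensors get more weight. Returns (distance_m, std_dev_m),
    or None if no sensor is working.
    """
    working = [r for r in readings if r.distance_m is not None]
    if not working:
        return None
    weights = [1.0 / r.std_dev_m ** 2 for r in working]
    total = sum(weights)
    distance = sum(w * r.distance_m for w, r in zip(weights, working)) / total
    return distance, (1.0 / total) ** 0.5


def disagreeing_pairs(readings: list[RangeReading], k: float = 3.0) -> list[tuple[str, str]]:
    """Cross-check: pairs of working sensors whose readings differ by more than k sigma."""
    working = [r for r in readings if r.distance_m is not None]
    flagged = []
    for a, b in combinations(working, 2):
        tolerance = k * (a.std_dev_m ** 2 + b.std_dev_m ** 2) ** 0.5
        if abs(a.distance_m - b.distance_m) > tolerance:
            flagged.append((a.sensor, b.sensor))
    return flagged


normal = [RangeReading("radar", 50.4, 0.5),
          RangeReading("lidar", 50.0, 0.05),
          RangeReading("camera", 51.0, 2.0)]
lidar_failed = [normal[0], RangeReading("lidar", None, 0.05), normal[2]]
lidar_spoofed = [normal[0], RangeReading("lidar", 30.0, 0.05), normal[2]]

print(fuse(normal))                      # about (50.005, 0.050)
print(fuse(lidar_failed))                # about (50.435, 0.485): radar and camera carry on
print(disagreeing_pairs(normal))         # []
print(disagreeing_pairs(lidar_spoofed))  # [('radar', 'lidar'), ('lidar', 'camera')]
```

When the LIDAR fails, the fused estimate survives with a larger uncertainty; when it is spoofed, the LIDAR is the common member of every disagreeing pair, which is the kind of signal a cross-checking system could act on.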

Testing brings its own challenges!

There are also several issues still to be addressed in testing the sensor technology needed for autonomous vehicles. As these functions are added to vehicles and used in safety-critical applications (for example, when interacting with pedestrians and cyclists, or in near-crash scenarios), it becomes harder to verify and evaluate them safely. For instance, to test a safety or automated driving feature, the test engineer must push the system to its sensor or system limits, which may result in dangerous situations. Additionally, if such a system were to be assessed for the ‘average driver’ or, even better, for a wide range of drivers of different abilities (5th-95th percentile), several non-professional drivers would have to be put at risk, or complicated scenarios set up on test tracks. There are several ways to tackle these issues, such as driving simulation and real-world testing, each with its own advantages and drawbacks.

Simulation and real-world testing, both on test tracks and in traffic, should be part of laying the groundwork for ‘vehicle type approval’ by governments. Recent estimates suggest that autonomous vehicles would have to be driven between 1.6 million and 11 billion miles, which could take up to 500 years for a fleet of 100 vehicles, to statistically assess the safety of automated driving technology.
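
The arithmetic behind such figures can be reproduced with a short Python sketch. The fleet-time calculation assumes (our assumption, for illustration) that every vehicle averages 25 mph around the clock. The second function shows one standard reason the mileage is so large: under a simple Poisson model, a fleet that drives n miles with zero failures only demonstrates a failure rate below r with confidence C once n is at least -ln(1 - C) / r. The target rate used below is hypothetical.

```python
import math


def years_to_drive(total_miles: float, fleet_size: int, avg_speed_mph: float,
                   hours_per_day: float = 24.0) -> float:
    """Calendar years for a fleet to accumulate total_miles."""
    miles_per_year = fleet_size * avg_speed_mph * hours_per_day * 365
    return total_miles / miles_per_year


def failure_free_miles_needed(max_rate_per_mile: float, confidence: float) -> float:
    """Failure-free miles needed to show, at the given confidence, that the true
    failure rate is below max_rate_per_mile (Poisson model): n = -ln(1 - C) / r."""
    return -math.log(1.0 - confidence) / max_rate_per_mile


# Assumptions (ours): 100 vehicles averaging 25 mph, 24 hours a day.
print(years_to_drive(11e9, 100, 25.0))         # about 502 years
print(years_to_drive(1.6e6, 100, 25.0) * 365)  # about 27 days

# Hypothetical target: fewer than 1 fatality per 100 million miles, 95% confidence.
print(failure_free_miles_needed(1e-8, 0.95))   # about 300 million miles
```

Because rare events such as fatal crashes need enormous exposure before they can be measured, the required mileage quickly outstrips what a physical fleet can drive, which is part of the case for simulation.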

Figure 1: Potential sensor suite of an automated vehicle. Image credit: Mostphotos